Neuroeducation, a growing field bridging the gap between neuroscience and education, has been instrumental in bringing tested brain science research into the classroom, offering data that has given educators new tools to develop novel classroom techniques (as well as to challenge outdated pedagogical doctrine). But what happens when neuroscience findings are taken too far?

In 2014, Paul Howard-Jones, a researcher at the Centre for Mind and Brain in Educational and Social Contexts at Bristol University in the United Kingdom, cautioned that neuromyths, or the “misconception generated by a misunderstanding, a misreading, or a misquoting of facts scientifically established by brain research to make a case for use of brain research in education or other contexts” are more pervasive in the educational field than we might think—and that these neuromyths may work against educational achievement.

In a survey of educators across the United Kingdom, the Netherlands, Turkey, Greece, and China, Howard-Jones found that teachers were quite susceptible to neuromyths, including the ideas that humans only use 10 percent of their brains and that children are less attentive after consuming sugary snacks. The results were published in a Perspectives piece in Nature Reviews Neuroscience in October 2014.

“There’s been a growing awareness of the fact that these scientific misunderstandings have proliferated in the educational community, and they’re quite widespread,” says Howard-Jones. “There’s generally a seed of truth underlying all these myths when you dig into them and try to understand where they come from. But they’ve been quite distorted and that’s troubling.”

Howard-Jones’ survey did not include educators in the United States, though he hypothesized that researchers would find similar results there, as such ideas have become commonplace across the globe. So perhaps it is not a surprise that, in 2017, Lauren McGrath, director of the Learning Exceptionalities and Related Neuropsychology (L.E.a.R.N.) lab at the University of Denver, and then-graduate student Kelly Macdonald, now at the University of Houston, found that US educators were similarly prone to endorsing common neuromyths. Their results were published in Frontiers in Psychology in August 2017.

The researchers had hypothesized that having some background in education or neuroscience might help buffer teachers from believing in common neuromyths. While it did to a certain extent, they found that even teachers with high exposure to neuroscience still endorsed the myths at fairly high rates.

“Unfortunately, communication between neuroscience and education is difficult because of vast differences in training backgrounds. Myths can grow out of these miscommunications. You end up with very simple explanations for different aspects of learning, which we know from experience is quite complex,” she said. “It’s important that educational training, perhaps in an educational psychology course, tackles these myths head on, explaining where they come from and why they aren’t true.”

In the attempt to do just that, here are a few of the common neuromyths—with a discussion of where the “seed” of the myth comes from as well as what the neuroscience findings they are based on can really tell us about human cognition and performance.

Neuromyth #1: Humans only use 10 percent of their brains.

Where It Comes From: The origin of this particular myth is a matter of some debate. Some have argued that it hails from William James, the father of American psychology, who, in an essay titled “The Powers of Men,” wrote, “as a rule men habitually use only a small part of the powers which they actually possess and which they might use under appropriate conditions,” and “we are making use of only a small part of our possible mental and physical resources.”

Others link this neuromyth back to the so-called “silent cortex” theory. When neurosurgeons first started stimulating parts of the brain with electrodes more than a century ago, they found that stimulating only about 10 percent of the cortex produced visible muscle twitches. This led those researchers to conclude that the other 90 percent of the brain was “silent,” or “uncommitted” to a particular cognitive function. Further studies by Karl Lashley found that rats could learn specific tasks even after large parts of their brains had been removed, which appeared to support the idea that the brain wasn’t being used at its full capacity. Today, the 10 percent myth persists, with blockbuster movies like “Lucy” helping to perpetuate it. But it isn’t limited to popular culture and sci-fi flicks: approximately 50 percent of the teachers Howard-Jones surveyed about neuromyths also agreed that humans only use 10 percent of their brain capacity.

The Reality: As neuroscientists developed newer and more sophisticated tools to look at brain function, they learned that the cortex is far from “uncommitted.” Marcus Raichle, a neuroscientist at Washington University in St. Louis and a member of the Dana Alliance for Brain Initiatives (DABI), was one of the first scientists to suggest that, even at rest, the brain is working at full capacity. Since then, most neuroscientists have accepted that the brain has a so-called “default mode,” a sophisticated network of brain areas that remain active even when the brain is resting.

“When I’m asked what the brain’s job is, if I can sum it up in one sentence or so, I always say the brain is in the prediction business. We’ve learned that it’s always on—and most of its energy is devoted to trying to predict what’s going to happen to you next,” says Raichle. “And I don’t see how the brain could be in the prediction business if it was working at only 10 percent capacity.”

Neuromyth #2: Eating sugary snacks results in hyperactivity and reduced focus and attention.

Where It Comes From: As researchers study the effect of diet on cognition, one thing is becoming abundantly clear: diet matters. Understanding how, where, and why, however, remains a bit elusive. In the 1970s, many researchers believed that sugary foods and food additives were linked to cognitive deficits, particularly in school-aged children. Several correlational studies showed a link between sugar intake and hyperactive behavior. These results were reinforced by anecdotes from parents and teachers, who consistently reported that children are less attentive (and more active) after consuming sugar. Even today, if you offer an elementary schooler a cookie near bedtime, you’ll likely get an earful from a parent about how that sugary snack will only rile the child up.

The Reality: This particular neuromyth has been around for quite some time, and Harris Lieberman, a researcher who studies diet and cognition at the U.S. Army Research Institute of Environmental Medicine, says that despite several studies debunking it, it remains a popular belief among both parents and educators. It’s a case where anecdote seems to have a stronger pull than sound scientific experimentation.

“For some reason, nutrition and behavior generates a lot of mythology. But in the controlled studies that investigated whether sugar versus placebo made children more hyperactive and interfered with their ability to concentrate, it’s clear that sugar was not linked to hyperactivity in kids,” he says. “But it’s very difficult to convince people once they think they are observing a relationship that it doesn’t exist, regardless of how many scientists say so and how many studies have been done.”

Neuromyth #3: Hemispheric dominance (whether you are “left-brained” or “right-brained”) determines how you learn.

Where It Comes From: In the 1960s, Roger Sperry, Joseph Bogen, and Michael Gazzaniga undertook what are now known as the “split-brain” studies. The group studied patients, usually people with epilepsy, who had undergone a surgical procedure that severed the corpus callosum, or the white matter neural fibers that link the two hemispheres of the brain. The group discovered that this procedure resulted in some striking hemispheric differences in cognition. Gazzaniga, in an essay written for Nature Reviews Neuroscience about his split-brain research, says, “Nothing can possibly replace a singular memory of mine: that of the moment when I discovered that case W.J. could no longer verbally describe (from his left hemisphere) stimuli presented to his freshly disconnected right hemisphere.” The group went on to demonstrate that severing the corpus callosum in its entirety blocks interhemispheric communication, which affects a patient’s ability to perceive and describe information depending on which side of the brain it was presented to.

More than four decades later, the split-brain work has undergone a metamorphosis in popular culture. It has been co-opted to describe visual and verbal learning styles, as well as different personality types. Books and popular periodicals argue that “hemispheric dominance,” or which side of the brain is more active, tells us about who we are as people: “left-brainers” are the more analytical types, while “right-brainers” are more creative and expressive. And today, you’ll find all manner of educational books instructing teachers on how to harness the two different hemispheres to encourage optimal learning in the classroom.

The Reality: Gazzaniga, now director of the Sage Center for the Study of Mind at the University of California, Santa Barbara (as well as a DABI member), says he couldn’t have predicted that his split-brain work would become such a part of popular culture when he started it more than 40 years ago.

“It took off and really had its own life,” he chuckled. “And it makes sense if you think about it in terms of a very easy way to explain what you knew about brain mechanisms and cognitive abilities. But it’s overly simplified and overstated.”

Gazzaniga says that the split-brain work has become “mixed up” with sound psychological and educational work that demonstrates that children use a variety of cognitive strategies to solve problems. “There are some kids who visualize problems and other kids who verbalize them. And some educators use those terms, visualizers and verbalizers,” he says. “That reality has been mapped on the right brain/left brain anatomy as an explanation. But that’s where it falls down. Because the actual neural mechanisms for how these cognitive strategies work are much more complex than that. Cognition, in general, is much more complex than that. That’s what we’ve learned over the years and continue to learn as we study hemispheric differences. It’s all just a lot more complicated than we ever thought.” (Learn more about right brain/left brain reality in our fact sheet on the topic.)

Neuromyth #4: Teenagers lack the ability to control their impulses in the classroom.

Where It Comes From: Adolescence is often discussed as a time of storm and stress. Over the past few years, numerous neuroscientists have tried to explain why teenagers have a penchant for risky and often impulsive behaviors—a trait that is usually outgrown by the time they reach adulthood. Larry Steinberg, a researcher at Temple University, has demonstrated that critical connections between the motivational centers of the brain and the prefrontal cortex, the brain’s executive control center, do not fully mature until after adolescence—suggesting that there is a lack of coordination between two key brain systems that results in poor impulse control. This dual-systems framework has led many to suggest that the teen brain renders adolescents unable to self-govern—and teachers should take this into consideration when trying to manage middle and high school classrooms.

The Reality: Steinberg’s dual-systems framework has offered neuroscientists great insights into how the brain matures and develops—but Abigail Baird, a neuroscientist at Vassar College and DABI member, says we need to be careful that we aren’t taking this idea of poor neural coordination too far.

“People want to say, ‘Oh, this boy can’t self-regulate because his frontal lobes aren’t mature yet.’ These assumptions make us prone to lowering our expectations beyond what is useful. One of the easiest ways to debunk the neuromyth that adolescents can’t self-regulate is to simply watch them with their peers,” says Baird. “Because in peer-related settings, we do see adolescents self-regulate wonderfully. They do it all the time. Think about it: most teens would never violate something that their peers thought was uncool. They don’t use outdated slang or a lame emoji. They are constantly checking themselves against what they should and should not be doing socially, you know, the things that are most important at this age.”

According to Baird, despite a lack of maturity in critical frontal lobe connections, even young children can self-regulate when motivated. But children tend to do so where they see rewards or direct benefit to themselves. And teachers can help teens self-regulate by making lesson plans more interesting and relevant—and by imposing consequences for inappropriate behavior.

“One important function of adolescence is having and acting on new, and even potentially risky, impulses so you can make mistakes and learn from experiences before you are expected to act like an adult. It’s really quite functional. Not having extraordinary self-regulation enables some developing teens to gain experience and this experience ultimately helps the frontal lobe mature,” she says. “But until then, adults and teachers can help teens out by acting as an external version of that frontal lobe system. We can allow them the space they need to question and rebel and figure things out while also making sure they understand that there are consequences when those behaviors go too far.” (Read more about teen behavior in our briefing paper, “A Delicate Balance: Risks, Rewards, and the Adolescent Brain.”)

Neuromyth #5: Students learn in different “styles.”

Where It Comes From: Over the past few decades, there have been many attempts to classify how students learn, and to determine whether individuals are more adept at receiving new information if it is presented in one modality over another. The concept of “learning styles” gained momentum in the 1990s, when a classroom inspector in New Zealand, Neil Fleming, proposed that students were more likely to excel in school if they were working in their preferred mode of learning. That is, some students learn better when material is presented in a particular format: visually, aurally, in a written format, or kinesthetically (VARK). Fleming’s theory held that trying to change an individual’s learning preference was nearly impossible. Rather, teachers could find more success by identifying a student’s preferred style and then providing new material in that modality. Since then, the concept of “learning styles” has spread far beyond New Zealand’s borders and has become very popular across educational circles.

The Reality: While there is little doubt that individuals may learn new concepts in different ways, Polly Husmann, a professor at Indiana University who studies how factors outside the classroom can affect learning, said there is scant evidence to support the idea that each student has a distinct learning style.

“It might be better to describe them as learning preferences–or the way we prefer to receive information because it comes easier to us in that format,” she explained. “Even so, when you are trying to learn something new, easier is not always better. If you get things in a preferred style, it may not be challenging enough for you to really process and understand.”

Husmann cites studies that show adhering to styles doesn’t make a difference in learning outcomes. When you present material in one modality to half the class and in another modality to the other half, there is no discernible difference between the two groups. Even in higher education, where it is expected students will have some awareness of the ways they learn best, Husmann’s own research shows that students do not tend to study on their own using methods that support their supposed learning style, and those who do study in line with it don’t fare any better when tested on the subject matter.

“I think it’s important that we refocus the conversation on how people learn: it’s not about trying to put people in boxes but to look at how people learn more broadly than a few broad categories,” she said. “When we can frame the discussion more about learning in general, instead of these specific styles, it opens teachers up to new possibilities, where they can try many different things to help their students succeed.”

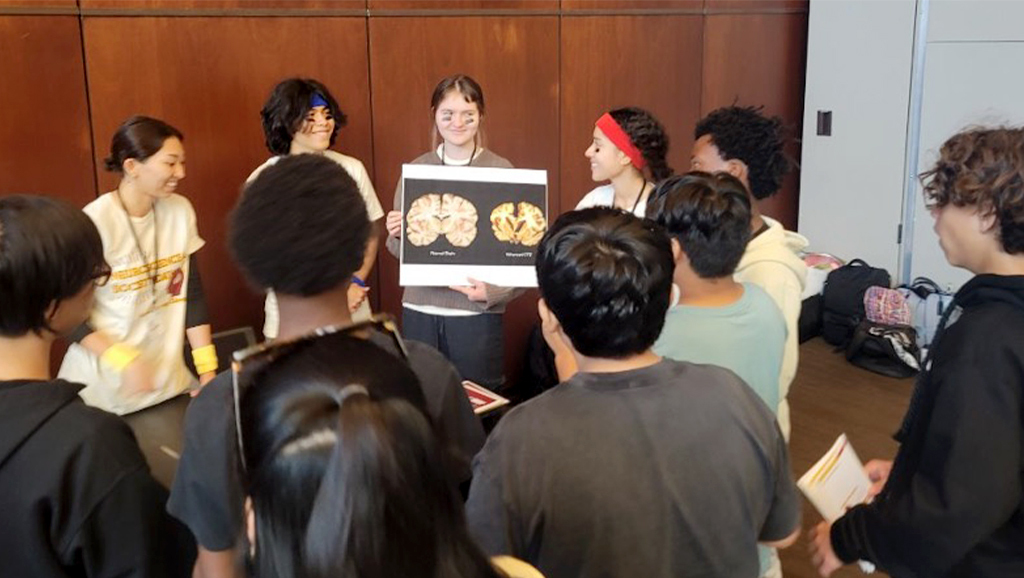

The Need for Communication and Collaboration

Howard-Jones says that neuroscience will continue to inform the educational field moving forward. Because of that relevance, it is all the more important that neuroscientists and educators work together, bringing scientific rigor to the problems teachers actually face in the classroom. McGrath and Macdonald note that their study shows that training can reduce but does not outright eliminate the endorsement of neuromyths. That’s why, they argue, it’s so important that both educators and neuroscientists find ways to communicate, collaborate, and bridge gaps in understanding.

“It’s important to emphasize that these myths have become so popular because teachers have such a sincere interest in understanding how their students learn–something we know is inherently complex,” said McGrath. “There’s an appeal to many of these explanations because they sound scientific–but they’ve been overgeneralized, oversimplified, and, more and more often, marketed directly to educators as a way of improving what they can do in the classroom. It’s important that we come up with trainings that work to directly dispel these myths so teachers can focus on solid evidence-based recommendations for their classroom.”

Howard-Jones agreed, adding that efforts to improve communication between the fields of neuroscience and education are becoming more common, but that the two disciplines need to share a common vocabulary and common concepts to prevent the misunderstandings from which neuromyths are born.

“I want to emphasize this isn’t about teachers being silly. It’s about a real challenge that scientists and educators need to step up to meet,” he said. “And it’s a challenge that will require collaboration, more neuroscience education in teacher training and the co-construction of messages by both teachers and scientists about what these sorts of findings really mean in an educational context.”